Introducing Grazer: Our Open-Source Algorithm Engine

At their core, Bluesky is building their platform, and the ATProto it rides on top of, to be a billionaire-proof ecosystem. Doing that means a rock-solid commitment to open-sourcing significant portions of the work, and to radically decentralizing power and delegating it to users. We think they're on to something great here, and we believe that building a more sustainable, less enshittified social web requires these commitments to reverberate through the rest of the ecosystem.

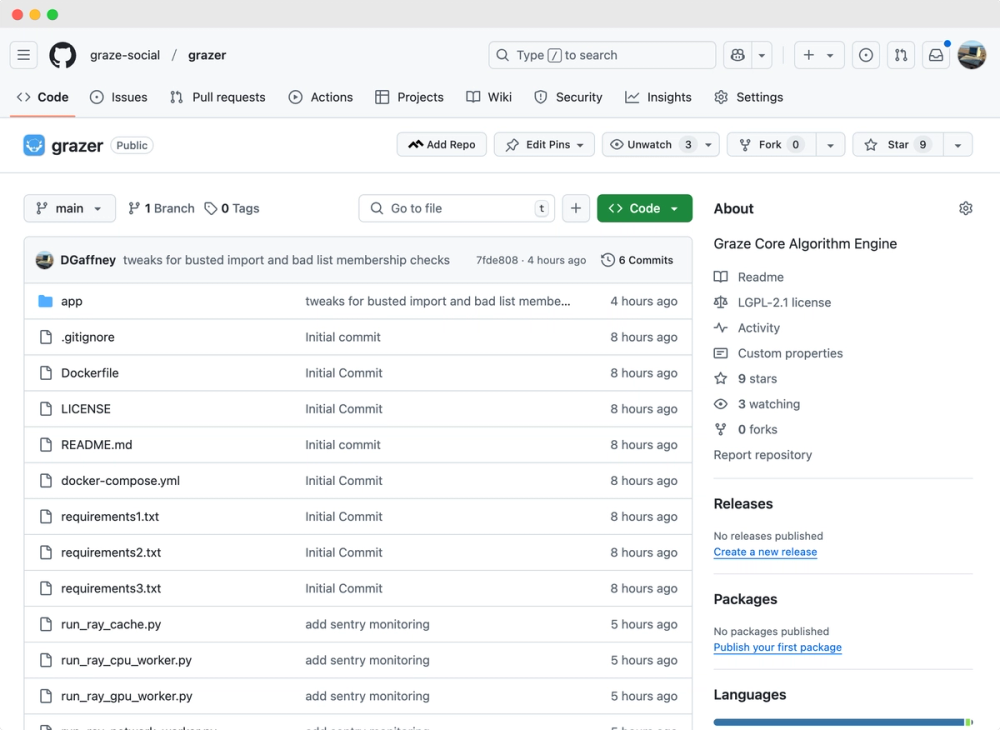

To that end, we're excited to open-source Grazer, the core intellectual output of our work at Graze. Grazer is a Python/Ray-based distributed system that ingests the Jetstream, pulls a set of algorithm manifests from a known Redis key, and then collides those manifests against each Jetstream batch as quickly as possible. (We intend for individual practitioners to modify the manifest-loading step to fit their own needs.)
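To make the ingest-and-collide step concrete, here is a minimal single-process sketch. It assumes a hypothetical manifest schema (an `id` plus one regex `pattern`) and hypothetical key and function names; it does not reproduce Grazer's real manifest format or Redis layout. The event shape follows Jetstream's JSON commit events (`did`, `kind`, `commit.operation`, `commit.collection`, `commit.rkey`, `commit.record`).

```python
from __future__ import annotations

import json
import re
from typing import Any, Dict, List, Optional

Event = Dict[str, Any]

# Hypothetical stand-in for the JSON stored under a known Redis key
# (in practice fetched with something like redis-py's client.get("algorithm_manifests")).
MANIFESTS_JSON = json.dumps([
    {"id": "cats", "pattern": r"\bcats?\b"},
    {"id": "rust", "pattern": r"\brust(lang)?\b"},
])


def load_manifests(raw: str) -> List[Dict[str, Any]]:
    """Parse manifests and compile each regex once per load, not once per record."""
    manifests = json.loads(raw)
    for m in manifests:
        m["compiled"] = re.compile(m["pattern"], re.IGNORECASE)
    return manifests


def post_text(event: Event) -> Optional[str]:
    """Return the text of a Jetstream post-create commit, or None for anything else."""
    commit = event.get("commit") or {}
    if event.get("kind") != "commit" or commit.get("operation") != "create":
        return None
    if commit.get("collection") != "app.bsky.feed.post":
        return None
    return commit.get("record", {}).get("text")


def collide(batch: List[Event], manifests: List[Dict[str, Any]]) -> Dict[str, List[str]]:
    """Check every post in a batch against every manifest; collect matching AT URIs per algorithm."""
    matches: Dict[str, List[str]] = {m["id"]: [] for m in manifests}
    for event in batch:
        text = post_text(event)
        if text is None:
            continue  # skip identity/account events, deletes, likes, etc.
        uri = f"at://{event['did']}/app.bsky.feed.post/{event['commit']['rkey']}"
        for m in manifests:
            if m["compiled"].search(text):
                matches[m["id"]].append(uri)
    return matches
```

Calling `collide(batch, load_manifests(MANIFESTS_JSON))` returns a mapping from algorithm id to the post URIs in that batch that matched it. A distributed version would shard batches across workers, but the per-batch shape stays the same.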

Algorithms in Graze can be any combination of CPU-heavy (e.g. regex), GPU-heavy (e.g. ML inference), or IO-heavy (e.g. fetching external lists of users from starter packs) work. Because any of these costs can arise at any point during execution, being able to fan out to dedicated lanes for each kind of load is essential. In our Ray folder, we define dedicated lanes for specific CPU-, GPU-, and IO-heavy operations, and we expect to keep moving in that direction as development continues.
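One way to picture the lane idea without a Ray cluster is a small router that sends each operation to a pool reserved for its resource type. The sketch below uses `concurrent.futures` thread pools as stand-ins for lanes; the lane names, pool sizes, `Op` structure, and `fetch_starter_pack_members` helper are all hypothetical. In Ray itself, lanes would more naturally be tasks or actors declared with resource requests such as `@ray.remote(num_gpus=1)`.

```python
from __future__ import annotations

import re
from concurrent.futures import Future, ThreadPoolExecutor
from dataclasses import dataclass
from typing import Any, Callable, Dict, Tuple


@dataclass(frozen=True)
class Op:
    """A unit of work tagged with the lane (resource type) it needs."""
    lane: str  # hypothetical lane names: "cpu", "gpu", "io"
    fn: Callable[..., Any]
    args: Tuple[Any, ...] = ()


class LaneRouter:
    """Send each op to its lane's pool, so a slow IO call can't block regex or inference work."""

    def __init__(self, sizes: Dict[str, int]) -> None:
        # Thread pools are stand-ins only: real CPU lanes would use processes or Ray
        # workers (the GIL serializes CPU-bound threads), and GPU lanes GPU-pinned actors.
        self.pools = {
            lane: ThreadPoolExecutor(max_workers=n, thread_name_prefix=lane)
            for lane, n in sizes.items()
        }

    def submit(self, op: Op) -> Future:
        # Raises KeyError for an unknown lane rather than silently picking a default.
        return self.pools[op.lane].submit(op.fn, *op.args)

    def shutdown(self) -> None:
        for pool in self.pools.values():
            pool.shutdown(wait=True)


def fetch_starter_pack_members(uri: str) -> set:
    """Hypothetical IO-bound helper; a real one would make a network call."""
    return {"did:plc:alice", "did:plc:bob"}


router = LaneRouter({"cpu": 4, "gpu": 1, "io": 16})
regex_hit = router.submit(Op("cpu", lambda text: bool(re.search(r"\bcat\b", text)), ("my cat",)))
members = router.submit(Op("io", fetch_starter_pack_members, ("at://example/starterpack",)))
print(regex_hit.result(), sorted(members.result()))
router.shutdown()
```

Sizing each lane independently is the point of the design: a burst of slow network lookups only saturates the `io` pool, while regex checks and inference keep their own capacity.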

Additionally, we can often detect when further execution of a given algorithm on a given record would be pointless, because a particular clause has already clearly rejected it, so being able to stop processing such records early is vitally important. Our LogicEvaluator implements that early exit, as well as interpreting Algorithm Manifests more generally.
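Here is a minimal sketch of short-circuit evaluation over a nested manifest clause, assuming a hypothetical schema of `and`/`or`/`not` nodes whose leaves name a predicate. It is not Grazer's LogicEvaluator; it only shows how an `and` clause can stop at the first failing condition, so an expensive check (the hypothetical `model_says_cute`, standing in for ML inference) never runs on a record that a cheap check already rejected.

```python
from __future__ import annotations

from typing import Any, Callable, Dict, List

Record = Dict[str, Any]
Predicate = Callable[[Record], bool]


def evaluate(node: Dict[str, Any], record: Record, predicates: Dict[str, Predicate]) -> bool:
    """Evaluate a clause tree, stopping as soon as the outcome is decided."""
    if "and" in node:
        # all() stops at the first False, so later children never run.
        return all(evaluate(child, record, predicates) for child in node["and"])
    if "or" in node:
        # any() stops at the first True.
        return any(evaluate(child, record, predicates) for child in node["or"])
    if "not" in node:
        return not evaluate(node["not"], record, predicates)
    # Leaf: a named predicate (hypothetical schema).
    return predicates[node["pred"]](record)


calls: List[str] = []  # records which predicates actually ran


def has_keyword(record: Record) -> bool:
    """Cheap CPU check."""
    calls.append("has_keyword")
    return "cat" in record["text"].lower()


def model_says_cute(record: Record) -> bool:
    """Stand-in for an expensive GPU inference call."""
    calls.append("model_says_cute")
    return True


PREDICATES = {"has_keyword": has_keyword, "model_says_cute": model_says_cute}
MANIFEST = {"and": [{"pred": "has_keyword"}, {"pred": "model_says_cute"}]}

rejected = evaluate(MANIFEST, {"text": "Just a dog today"}, PREDICATES)
# rejected is False, and calls == ["has_keyword"]: the model was never invoked.
```

The savings depend on ordering: placing cheap leaves before expensive ones inside an `and` lets most records exit before reaching the costly lanes. A production evaluator might reorder children by estimated cost, though that is an assumption about design, not a description of Grazer.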

Another potentially useful tool is our backfilling strategy built on the Jetstream. Since you currently can't read the Jetstream backwards, we scan small windows of Jetstream records, select the matches, and then reverse them, producing a pubsub feed of content for a given algorithm that runs backwards in time.
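The backfill idea can be sketched as a generator that walks backwards in fixed time windows, reads each window forward (the only direction the stream supports), keeps the matches, and emits them newest-first. Jetstream's subscribe endpoint accepts a `cursor` (a Unix timestamp in microseconds) for replaying from a past point, which is what makes the forward read of each window possible. Here `read_forward` is a hypothetical in-memory stand-in for such a connection, and the window size, function names, and parameters are assumptions.

```python
from __future__ import annotations

from typing import Any, Callable, Dict, Iterator, List

Event = Dict[str, Any]


def read_forward(events: List[Event], start_us: int, end_us: int) -> Iterator[Event]:
    """Hypothetical stand-in for a stream opened at cursor=start_us and read until end_us.
    Events are assumed to be sorted by time_us, as a replayed stream would be."""
    for event in events:
        if event["time_us"] >= end_us:
            break
        if event["time_us"] >= start_us:
            yield event


def backfill(
    events: List[Event],
    matches: Callable[[Event], bool],
    now_us: int,
    window_us: int,
    stop_us: int,
) -> Iterator[Event]:
    """Yield matching events newest-first, scanning windows from now_us back to stop_us."""
    end = now_us
    while end > stop_us:
        start = max(stop_us, end - window_us)
        # Read this window forward, keep matches, then reverse so output runs backwards in time.
        hits = [e for e in read_forward(events, start, end) if matches(e)]
        yield from reversed(hits)
        end = start  # step the window back


stream = [{"time_us": t, "text": f"post {t}"} for t in range(1, 11)]
newest_first = [
    e["time_us"]
    for e in backfill(stream, lambda e: e["time_us"] % 2 == 0, now_us=11, window_us=3, stop_us=0)
]
# newest_first == [10, 8, 6, 4, 2]
```

Keeping windows small bounds how much of the stream must be buffered before anything is emitted, so a newly created feed can show its most recent matches quickly while older history keeps filling in.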

We'll certainly keep iterating on our core engine, but wanted to give it some sunlight as quickly as was reasonable. We look forward to working with the community to build a great core engine we can *all* use for algorithmic filtering into the future!